B6A Adds Optical Character Recognition To Its Media Analysis Platform

BY ADAM GROSSMAN, ALEX CORDOVER, ALBERTIO RIOS, & JOSH HERZBERG

Block Six Analytics (B6A) is pleased to announce the addition of optical character recognition (OCR) capability to our Ensemble Training Process (ETP) within our Media Analysis Platform (MAP). Adding OCR to MAP's best-in-class logo detection increases the accuracy and speed with which we can identify the time, location, prevalence, and value of a specific brand's images in videos and photos for specific activations.

“Our proprietary approach to training solves critical challenges that have impacted the accuracy and speed of object identification,” said CEO Adam Grossman. “By adding text-based analysis to our process, B6A's clients receive better results than human or image detection alone.”

Using OCR enables B6A to detect and read logos that are primarily text-based. In the past, machine learning platforms have had difficulty capturing text logos because their features differ from those of image-based logos. In particular, letters look very similar to one another, which makes text easy for people to read but hard for a machine to differentiate.

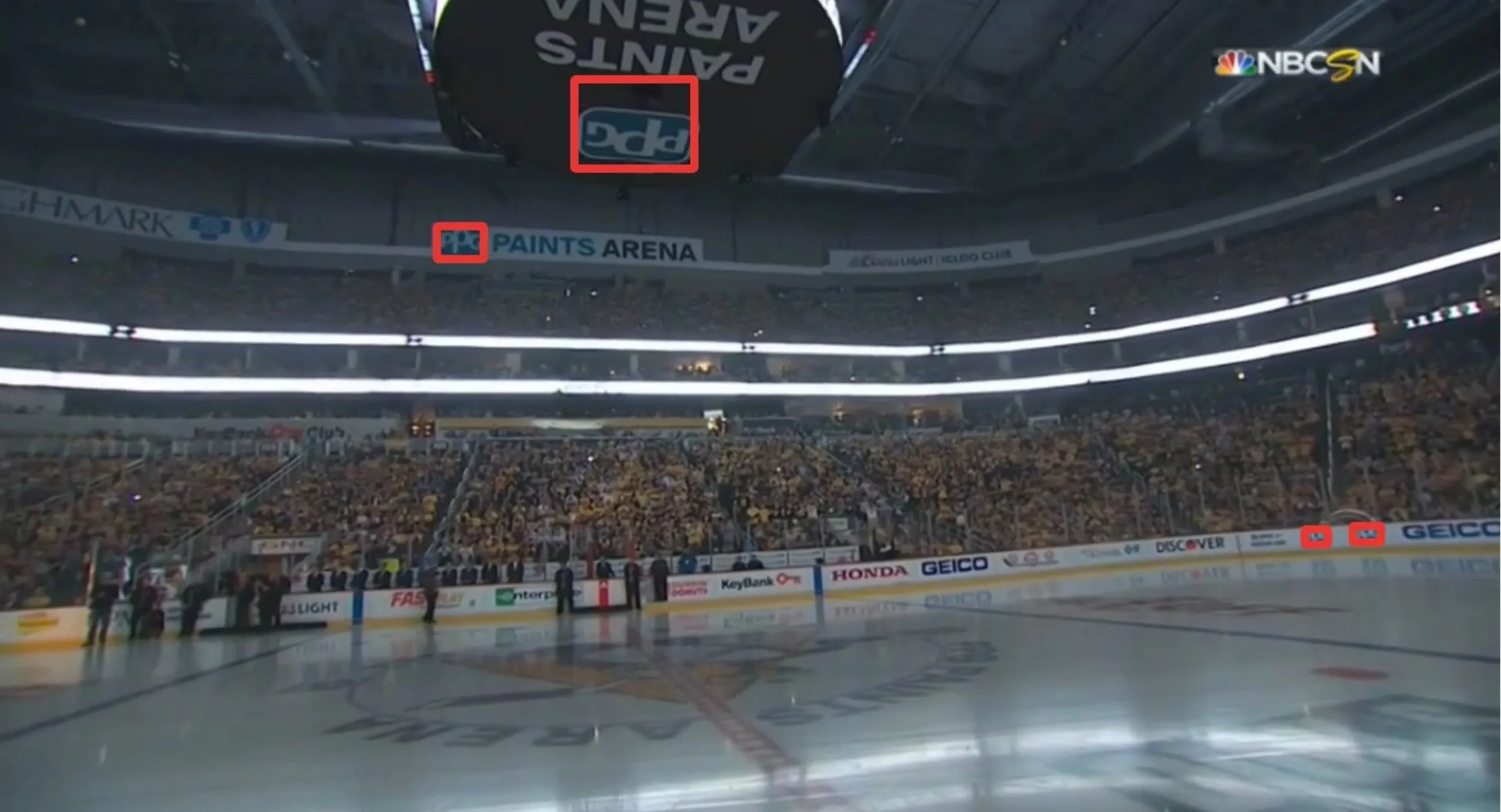

B6A’s proprietary approach enables us to identify these logos and treat them as text, so that we can apply natural language processing (NLP). Our system determines unique words by measuring the distances between the letters in a sequence within an object. For example, our system recognizes “PPG” by identifying the first “P” and determining how far it is from the second “P” and from the “G”. Each word produces a different combination of distances, and the system uses that combination to identify the object in a video. We then use our proprietary algorithms to layer in core visual metrics (centricity, prevalence, time, and clarity) and to calculate value.
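The distance-based idea can be illustrated with a short example. B6A has not published its method, so this is only a minimal sketch under stated assumptions: an upstream OCR step (not shown) is assumed to return each character with the horizontal center and height of its bounding box, all on a single line of text. `CharBox`, `group_into_words`, `distance_signature`, `match_logo`, the 1.2 word-gap ratio, and the 0.15 matching tolerance are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class CharBox:
    """One recognized character and its box geometry (hypothetical OCR output format)."""
    char: str
    x: float       # horizontal center of the character box, in pixels
    height: float  # box height, used to make distances scale-invariant


# A word's signature: its text plus the normalized gaps between consecutive letters.
Signature = Tuple[str, Tuple[float, ...]]


def group_into_words(boxes: List[CharBox], gap_ratio: float = 1.2) -> List[List[CharBox]]:
    """Split one line of characters into words wherever the center-to-center gap
    exceeds gap_ratio times the mean character height."""
    if not boxes:
        return []
    ordered = sorted(boxes, key=lambda b: b.x)
    mean_h = sum(b.height for b in ordered) / len(ordered)
    words = [[ordered[0]]]
    for prev, cur in zip(ordered, ordered[1:]):
        if cur.x - prev.x > gap_ratio * mean_h:
            words.append([cur])  # large gap: start a new word
        else:
            words[-1].append(cur)
    return words


def distance_signature(word: List[CharBox]) -> Signature:
    """Return the word's text and the gaps between consecutive letters, divided by
    the mean letter height so the same logo matches at any on-screen size."""
    scale = sum(b.height for b in word) / len(word)
    gaps = tuple(round((b.x - a.x) / scale, 2) for a, b in zip(word, word[1:]))
    return "".join(b.char for b in word), gaps


def match_logo(word: List[CharBox], templates: Dict[str, Signature],
               tol: float = 0.15) -> Optional[str]:
    """Return the brand whose text and spacing pattern both match the word, if any."""
    text, gaps = distance_signature(word)
    for brand, (t_text, t_gaps) in templates.items():
        if (text == t_text and len(gaps) == len(t_gaps)
                and all(abs(g - t) <= tol for g, t in zip(gaps, t_gaps))):
            return brand
    return None


# Usage: a "PPG" logo (characters arrive out of order) plus unrelated text further right.
templates = {"PPG": ("PPG", (0.75, 0.75))}
frame = [CharBox("P", 100, 40), CharBox("G", 160, 40), CharBox("P", 130, 40),
         CharBox("G", 400, 40), CharBox("O", 430, 40)]
words = group_into_words(frame)
print([match_logo(w, templates) for w in words])  # ['PPG', None]
```

Because each gap is divided by the letter height, the same “PPG” logo filmed at twice the size still yields the signature (0.75, 0.75) and matches the template.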

Text analysis does not work for every logo. For image-based logos, the main challenge in training a system is collecting images. In particular, it is necessary to collect hundreds, if not thousands, of images from environments similar to the one in which the object appears in the video. B6A’s proprietary image synthesizer automates this process: it requires only 10 to 20 original images and synthesizes the remaining images needed to complete the training set. Because this streamlines the creation of training data, MAP can be trained on a new logo and deliver results in as little as 24 hours.
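B6A’s synthesizer is proprietary, so the example below only illustrates the general idea with a common and much simpler technique, cut-and-paste augmentation: a handful of original logo images are pasted at random scales and positions onto background frames, and each result is labelled with the logo’s bounding box. Images are plain lists of grayscale pixels so that the sketch needs only the standard library; `synthesize`, its parameters, and the convention that 0 marks a transparent logo pixel are assumptions for illustration.

```python
import random
from typing import List, Tuple

Image = List[List[int]]           # grayscale pixel rows; 0 = transparent in a logo image
BBox = Tuple[int, int, int, int]  # (top, left, height, width) label for the pasted logo


def scale_nearest(img: Image, factor: int) -> Image:
    """Enlarge an image by an integer factor by repeating each pixel and each row."""
    return [[px for px in row for _c in range(factor)]
            for row in img for _r in range(factor)]


def paste(background: Image, logo: Image, top: int, left: int) -> Image:
    """Copy the background and draw the logo's non-transparent pixels onto the copy."""
    out = [row[:] for row in background]
    for dy, row in enumerate(logo):
        for dx, px in enumerate(row):
            if px != 0:
                out[top + dy][left + dx] = px
    return out


def synthesize(originals: List[Image], backgrounds: List[Image], n: int,
               max_scale: int = 3, seed: int = 0) -> List[Tuple[Image, BBox]]:
    """Create n labelled training images by pasting randomly chosen originals,
    at random integer scales and positions, onto randomly chosen backgrounds."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        src = rng.choice(originals)
        bg = rng.choice(backgrounds)
        bg_h, bg_w = len(bg), len(bg[0])
        # Only consider scales at which the logo still fits inside the background.
        fits = [f for f in range(1, max_scale + 1)
                if len(src) * f <= bg_h and len(src[0]) * f <= bg_w]
        if not fits:
            raise ValueError("background is smaller than the logo")
        logo = scale_nearest(src, rng.choice(fits))
        h, w = len(logo), len(logo[0])
        top, left = rng.randint(0, bg_h - h), rng.randint(0, bg_w - w)
        samples.append((paste(bg, logo, top, left), (top, left, h, w)))
    return samples


# Usage: one tiny L-shaped "logo" and one flat 8x8 background frame.
originals = [[[9, 9], [9, 0]]]
backgrounds = [[[1] * 8 for _ in range(8)]]
dataset = synthesize(originals, backgrounds, n=100)
print(len(dataset))  # 100
```

A realistic synthesizer would need far more variation than random scale and position, but the principle is the same: a few originals are multiplied into a labelled training set without collecting new footage.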

Once MAP is trained, it can fully process games and events and produce results on the same day the game or event occurs. B6A’s system can also track images in situations that were previously difficult to analyze. These include:

- Applying a consistent approach to image occlusion. ETP combined with deep learning enables MAP to automatically determine whether a logo or image is clear to a human viewer based on how much of it is blocked.

- Identifying images during fast camera movement. For example, the TV camera pans up and down the ice during an NHL game, a situation that machine learning systems have struggled to capture in the past. ETP substantially reduces this problem, enabling MAP to identify and value images in this type of video clip.
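The occlusion rule in the first item can be illustrated with a simple geometric check. This is a minimal sketch, not B6A’s method: MAP makes this judgment with ETP and deep learning, whereas here a fixed visibility threshold stands in for that learned decision. Logo and occluder positions are assumed to be integer pixel rectangles, and `visible_fraction`, `is_clear`, and the 0.7 default threshold are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1) in pixels, half-open: x0 <= x < x1


def visible_fraction(logo: Box, occluders: List[Box]) -> float:
    """Fraction of the logo's pixels not covered by any occluding box.
    Overlapping occluders are counted once because each pixel is tested once."""
    x0, y0, x1, y1 = logo
    area = (x1 - x0) * (y1 - y0)
    if area <= 0:
        return 0.0
    hidden = sum(
        1
        for y in range(y0, y1)
        for x in range(x0, x1)
        if any(ox0 <= x < ox1 and oy0 <= y < oy1 for ox0, oy0, ox1, oy1 in occluders)
    )
    return 1.0 - hidden / area


def is_clear(logo: Box, occluders: List[Box], min_visible: float = 0.7) -> bool:
    """Hypothetical rule: treat the logo as clear if enough of it remains visible."""
    return visible_fraction(logo, occluders) >= min_visible


# Usage: a 10x10-pixel logo with a player covering its left half.
board = (0, 0, 10, 10)
player = (0, 0, 5, 10)
print(visible_fraction(board, [player]))  # 0.5
print(is_clear(board, [player]))          # False
```

Counting pixels keeps the logic easy to follow; for large frames, a polygon or mask intersection would be the more efficient design choice.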

Combining text and image analysis can increase detection accuracy for objects that contain both elements. Because ETP can use an image-based approach, a text-based approach, or a combination of both within MAP, we can process videos quickly and accurately using the latest advances in object identification. For more information about MAP and ETP, contact Block Six Analytics.
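To illustrate how evidence from both analyses can strengthen a detection, the sketch below fuses an image-detector confidence and a text-detector confidence with a noisy-OR rule. This is a swapped-in, textbook fusion technique rather than ETP’s actual method; it assumes both scores are calibrated probabilities and that the two detectors err independently, and `fuse_confidences` is a hypothetical name. When only one kind of analysis applies, for example to a purely text-based logo, the function simply returns that detector’s score.

```python
from typing import Optional


def fuse_confidences(p_image: Optional[float], p_text: Optional[float]) -> float:
    """Noisy-OR fusion of detector confidences, assuming independent detectors.
    Pass None for an analysis that was not run on this object."""
    scores = [p for p in (p_image, p_text) if p is not None]
    if not scores:
        raise ValueError("at least one detector score is required")
    miss = 1.0
    for p in scores:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"score out of range: {p}")
        miss *= 1.0 - p  # probability that this detector also misses the logo
    return 1.0 - miss


# Usage: both detectors see a logo that contains text and a graphic mark.
combined = fuse_confidences(0.6, 0.5)
text_only = fuse_confidences(None, 0.5)
```

With an image score of 0.6 and a text score of 0.5, the fused score is 1 - (0.4 x 0.5) = 0.8, higher than either score alone. The independence assumption is the key design caveat: if both detectors tend to fail on the same frames, noisy-OR overstates the combined confidence.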